In this lecture, we investigate successful CNN architectures and how they evolved.

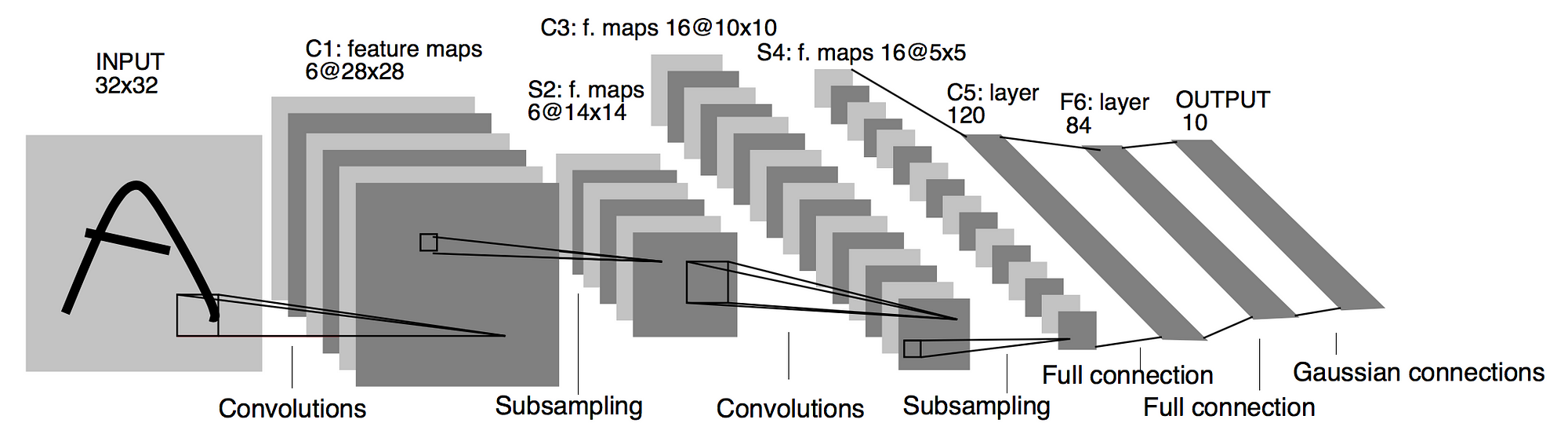

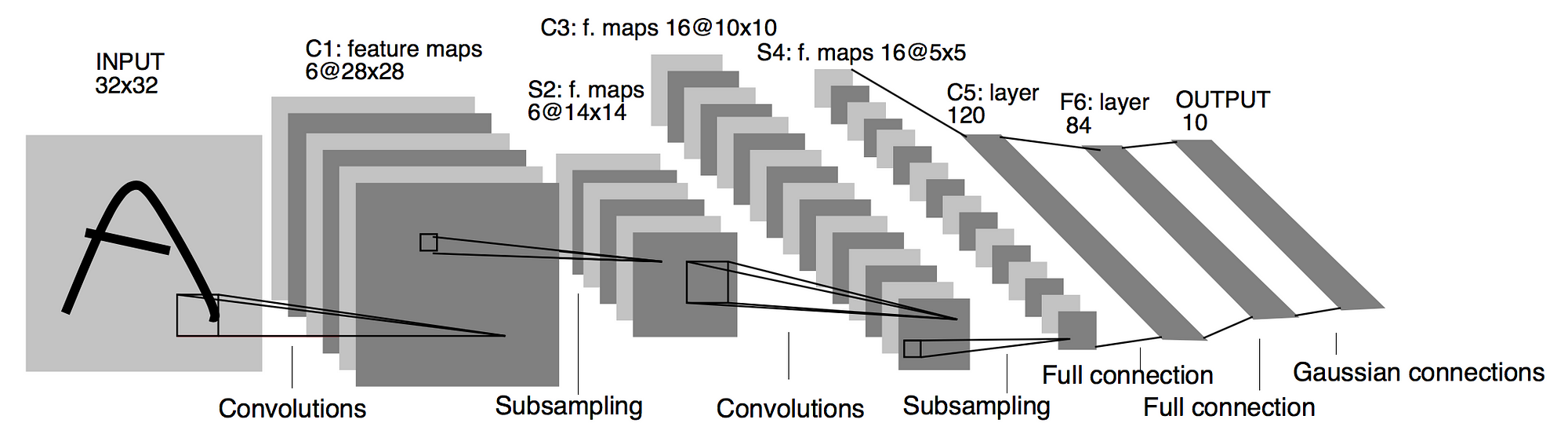

LeNet was one of the first CNNs to perform well in practice. It was applied to recognizing handwritten zip code digits.

The architecture of the network is $\text{Conv} \to \text{Pool} \to \text{Conv} \to \text{Pool} \to \text{FC} \to \text{FC} \to \text{FC}$.
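To make this layer sequence concrete, here is a minimal sketch that traces tensor shapes through a LeNet-5-style network using the output-size formula $(W - F + 2P)/S + 1$. The hyperparameters (32×32 grayscale input, 6 and then 16 filters of size 5×5, 2×2 pooling with stride 2, FC layers of 120, 84, and 10 units) are the classic LeNet-5 values and are assumed here; the original network also uses sparse connections between some feature maps, which this sketch ignores. The helper names `conv_out` and `lenet_shapes` are hypothetical.

```python
# Sketch: trace tensor shapes through a LeNet-5-style network.
# Assumed hyperparameters: 32x32 grayscale input, 5x5 convs (stride 1, no padding),
# 2x2 pooling with stride 2, then FC layers with 120, 84 and num_classes units.


def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Spatial output size of a conv or pooling layer: (W - F + 2P) / S + 1."""
    return (size - kernel + 2 * pad) // stride + 1


def lenet_shapes(input_size: int = 32, num_classes: int = 10) -> list[tuple[str, tuple[int, ...]]]:
    shapes = []
    s, c = input_size, 1
    # Conv: 6 filters of size 5x5
    s, c = conv_out(s, 5), 6
    shapes.append(("conv1", (c, s, s)))
    # Pool: 2x2 window, stride 2
    s = conv_out(s, 2, stride=2)
    shapes.append(("pool1", (c, s, s)))
    # Conv: 16 filters of size 5x5
    s, c = conv_out(s, 5), 16
    shapes.append(("conv2", (c, s, s)))
    # Pool: 2x2 window, stride 2
    s = conv_out(s, 2, stride=2)
    shapes.append(("pool2", (c, s, s)))
    # Flatten the feature maps, then three fully connected layers
    shapes.append(("flatten", (c * s * s,)))
    for name, units in (("fc1", 120), ("fc2", 84), ("fc3", num_classes)):
        shapes.append((name, (units,)))
    return shapes


for name, shape in lenet_shapes():
    print(f"{name:8s} {shape}")
```

The feature maps shrink from 28×28 after the first convolution to 5×5 after the second pooling layer, so the first FC layer receives a vector of $16 \cdot 5 \cdot 5 = 400$ values.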

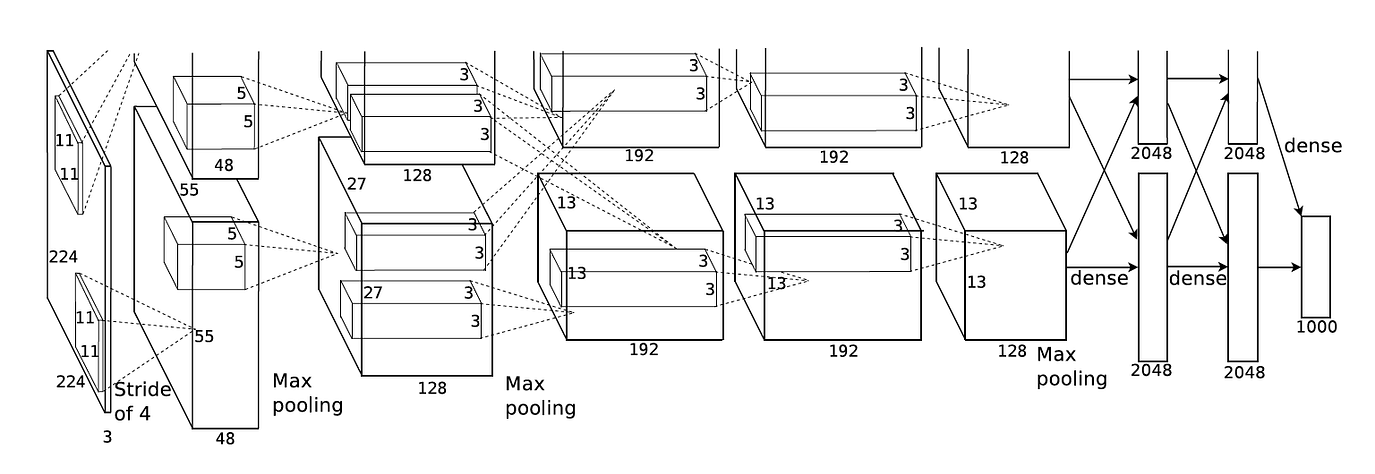

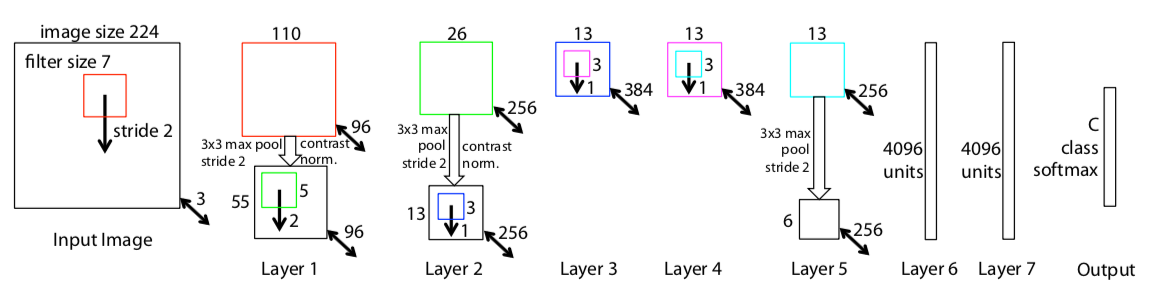

AlexNet was the first large-scale CNN to achieve breakthrough results in image classification. It won the ImageNet (ILSVRC) 2012 competition, beating its competitors by a large margin. Its overall architecture is similar to LeNet's, but much larger.
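To get a feel for the scale, the sketch below computes the output size and parameter count of AlexNet's first convolutional layer. It assumes the setting commonly used in course material: a 227×227×3 input and 96 filters of size 11×11 with stride 4 and no padding (the original paper quotes 224×224 inputs, so treat the exact numbers as illustrative). The helpers `conv_out` and `conv_params` are hypothetical names.

```python
# Sketch: output size and parameter count of AlexNet's first conv layer.
# Assumptions: 227x227x3 input, 96 filters of size 11x11, stride 4, no padding.


def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Spatial output size: (W - F + 2P) / S + 1."""
    return (size - kernel + 2 * pad) // stride + 1


def conv_params(kernel: int, in_ch: int, out_ch: int, bias: bool = True) -> int:
    """Learnable parameters: K*K*C_in*C_out weights, plus C_out biases if enabled."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


out = conv_out(227, kernel=11, stride=4)
print(f"conv1 output: {out}x{out}x96")                         # 55x55x96
print(f"conv1 weights: {conv_params(11, 3, 96, bias=False)}")  # 34848
```

A single layer already produces $55 \cdot 55 \cdot 96 = 290{,}400$ activations per image, far more than any layer in LeNet.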

AlexNet was the first prominent CNN to use the ReLU activation function, and its success helped make ReLU the default choice in later networks.
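As a small illustration of why ReLU, $f(x) = \max(0, x)$, is attractive, the sketch below compares its gradient with that of the sigmoid. For positive inputs the ReLU gradient stays at 1, while the sigmoid gradient shrinks toward 0 as $|x|$ grows (saturation), which slows down gradient-based training in deep networks. The function names are illustrative, and using a gradient of 0 at exactly $x = 0$ is a convention assumed here.

```python
import math


def relu(x: float) -> float:
    """ReLU activation: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Gradient is 1 for positive inputs and 0 otherwise (0 chosen at x == 0)
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)), at most 0.25
    s = sigmoid(x)
    return s * (1.0 - s)


for x in (-5.0, 0.5, 5.0, 10.0):
    print(f"x={x:5.1f}  relu'={relu_grad(x):.1f}  sigmoid'={sigmoid_grad(x):.6f}")
```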

ZFNet won ImageNet 2013. Its architecture is very similar to AlexNet's; it mainly changed some hyperparameters, such as the filter size, the stride, and the number of filters in each layer.
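The sketch below compares the weight counts of the layers that are usually quoted as changed in ZFNet: the first layer goes from 11×11 filters with stride 4 to 7×7 filters with stride 2, and the third to fifth conv layers use 512, 1024, and 512 filters instead of 384, 384, and 256. These layer settings are assumptions taken from that common summary; biases and AlexNet's original split across two GPUs are ignored, and the helper names are hypothetical.

```python
# Sketch: weight counts of AlexNet vs ZFNet conv layers (biases ignored).
# Each entry: (name, kernel, stride, in_channels, out_channels).
ALEXNET = [
    ("conv1", 11, 4, 3, 96),
    ("conv3", 3, 1, 256, 384),
    ("conv4", 3, 1, 384, 384),
    ("conv5", 3, 1, 384, 256),
]
ZFNET = [
    ("conv1", 7, 2, 3, 96),
    ("conv3", 3, 1, 256, 512),
    ("conv4", 3, 1, 512, 1024),
    ("conv5", 3, 1, 1024, 512),
]


def conv_weights(kernel: int, in_ch: int, out_ch: int) -> int:
    """Number of weights in a conv layer: K*K*C_in*C_out."""
    return kernel * kernel * in_ch * out_ch


def total_weights(layers) -> int:
    return sum(conv_weights(k, i, o) for _, k, _, i, o in layers)


for (name, ka, sa, ia, oa), (_, kz, sz, iz, oz) in zip(ALEXNET, ZFNET):
    print(f"{name}: AlexNet {ka}x{ka}/s{sa}, {oa} filters, {conv_weights(ka, ia, oa):,} weights | "
          f"ZFNet {kz}x{kz}/s{sz}, {oz} filters, {conv_weights(kz, iz, oz):,} weights")
print(f"total: AlexNet {total_weights(ALEXNET):,} | ZFNet {total_weights(ZFNET):,}")
```

The smaller first-layer filters cut that layer's weights by more than half, while the wider middle layers make ZFNet's convolutional part considerably larger overall.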

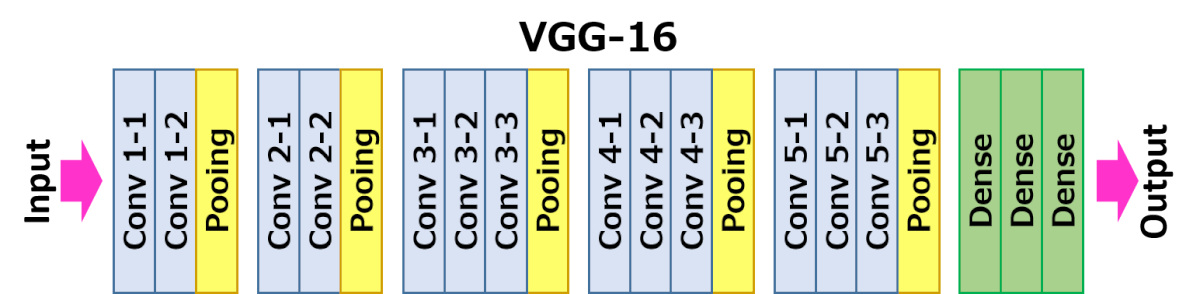

VGGNet used much smaller filters (3×3) and a much deeper network than previous architectures. A stack of several Conv layers with small filters has the same effective receptive field as a single layer with a larger filter, but the stack has more non-linearities and fewer parameters.
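A worked example of this trade-off, assuming stride-1 convolutions with $C$ input and $C$ output channels in every layer and ignoring biases: three stacked 3×3 layers see a $1 + 3 \cdot (3 - 1) = 7$ pixel wide region, like one 7×7 layer, but need $3 \cdot (3^2 C^2) = 27C^2$ weights instead of $7^2 C^2 = 49C^2$, and apply three non-linearities instead of one. The sketch below checks these numbers for $C = 64$; the helper names are hypothetical.

```python
# Sketch: receptive field and weights of stacked small convs vs one large conv.
# Assumptions: stride-1 convs, C -> C channels in every layer, biases ignored.


def receptive_field(kernels: list[int]) -> int:
    """Effective receptive field of stacked stride-1 convs: 1 + sum(k - 1)."""
    return 1 + sum(k - 1 for k in kernels)


def stack_weights(kernels: list[int], channels: int) -> int:
    """Total weights when every layer maps C -> C channels: sum(k^2 * C^2)."""
    return sum(k * k * channels * channels for k in kernels)


C = 64
stack = [3, 3, 3]
single = [receptive_field(stack)]  # one layer with the same receptive field (7x7)

print(receptive_field(stack))    # 7
print(stack_weights(stack, C))   # 27 * C^2 = 110592
print(stack_weights(single, C))  # 49 * C^2 = 200704
print(len(stack), "vs", len(single), "non-linearities")
```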

A single forward pass of VGGNet (the VGG16 variant) needs about 96 MB of memory per image just for the activations, which is a lot and scales poorly to larger input images. The network also has about 138M parameters, most of which are in the final FC layers.
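These figures can be checked with a short count. The sketch below walks through the VGG16 configuration, assuming a 224×224×3 input, 3×3 convolutions with stride 1 and padding 1, 2×2 max pooling with stride 2, 1000 output classes, biases included, and 4-byte (float32) values. Counting each layer's output exactly once gives about 15.2M values, roughly 61 MB, which is lower than the 96 MB figure; memory estimates vary with what is counted (for example, whether ReLU outputs or buffers for the backward pass are stored separately), so treat both as ballpark numbers. The names `VGG16_CONV`, `VGG16_FC`, and `vgg16_stats` are hypothetical.

```python
# Sketch: parameter count and forward-pass activation count of VGG16.
# Assumptions: 224x224x3 input, 3x3 convs (stride 1, padding 1), 2x2 max pool
# (stride 2), 1000 classes, biases included, float32 = 4 bytes per value.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_FC = [4096, 4096, 1000]


def vgg16_stats(input_size: int = 224, in_ch: int = 3) -> tuple[int, int, int]:
    size, ch = input_size, in_ch
    conv_params = fc_params = 0
    activations = size * size * ch  # the input image itself
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2  # 2x2 max pool with stride 2 halves the spatial size
        else:
            conv_params += 3 * 3 * ch * layer + layer  # weights + biases
            ch = layer  # padding 1 keeps the spatial size unchanged
        activations += size * size * ch  # output of this layer
    features = size * size * ch  # flattened 7x7x512 = 25088
    for units in VGG16_FC:
        fc_params += features * units + units
        activations += units
        features = units
    return conv_params, fc_params, activations


conv_p, fc_p, acts = vgg16_stats()
total = conv_p + fc_p
print(f"total parameters: {total:,}")             # 138,357,544
print(f"share in FC layers: {fc_p / total:.1%}")  # 89.4%
print(f"activations: {acts:,} values, about {acts * 4 / 1e6:.0f} MB")
```

The first FC layer alone ($25088 \times 4096$ weights) holds over 100M parameters, which is why most of VGG16's parameters sit in its last FC layers.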

The output of the second FC layer (often called FC7) generalizes well to other tasks and can be used as a feature representation for transfer learning.